How We Built the First SEC-Regulated AI Financial Advisor with Full-Context Reasoning

New Baselines

Origin’s financial advisor AI now averages 98.3% on CFP® sample exam questions (up from 96%). In an internal study, it outperformed the top human CFP®s (~85%) and the ~75% pass-rate baseline, reflecting improved reasoning and mathematical accuracy.

These gains are driven by architectural upgrades, precision verification via live code execution (cent-level math instead of the prior dollar-level math), and tighter security and abstraction layers. They also build on expanded training databases of sample CFP® practice exam questions that Origin developed, a strategic migration from Google to OpenAI models for greater robustness and scalability, and a modular architecture that allows rapid evolution as LLMs advance. The system now uses over 200 MCP tools orchestrated through an Anthropic-partnered abstraction layer that first previews a glossary of tools and then expands only the relevant ones, mitigating context rot and maintaining efficiency. Combined with zero-data-retention agreements and strict API-gated access that make PII exfiltration technically impossible, Origin’s infrastructure is purpose-built to evolve in lockstep with the frontier of trustworthy AI.
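The difference between dollar-level and cent-level math can be illustrated with Python's standard `decimal` module. This is a minimal sketch, assuming the verification step executes ordinary Python; `future_value_cents` and `round_to_dollar` are hypothetical names, not Origin's code.

```python
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")
DOLLAR = Decimal("1")


def future_value_cents(principal: str, annual_rate: str, years: int,
                       periods_per_year: int = 12) -> Decimal:
    """Compound `principal` at `annual_rate` and round the result to the cent.

    Inputs are strings so they parse as exact decimals rather than binary
    floats; division and powers then run at the decimal context's default
    28-digit precision, and rounding happens exactly once, at the end.
    """
    rate_per_period = Decimal(annual_rate) / periods_per_year
    periods = years * periods_per_year
    value = Decimal(principal) * (1 + rate_per_period) ** periods
    return value.quantize(CENT, rounding=ROUND_HALF_UP)


def round_to_dollar(amount: Decimal) -> Decimal:
    """Dollar-level rounding, shown only for comparison."""
    return amount.quantize(DOLLAR, rounding=ROUND_HALF_UP)


fv = future_value_cents("10000", "0.05", years=10)
print(fv, round_to_dollar(fv))
```

Rounding once with an explicit rounding mode keeps the result reproducible to the cent; the dollar-rounded figure shows how much detail a dollar-level check would discard.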

Introduction

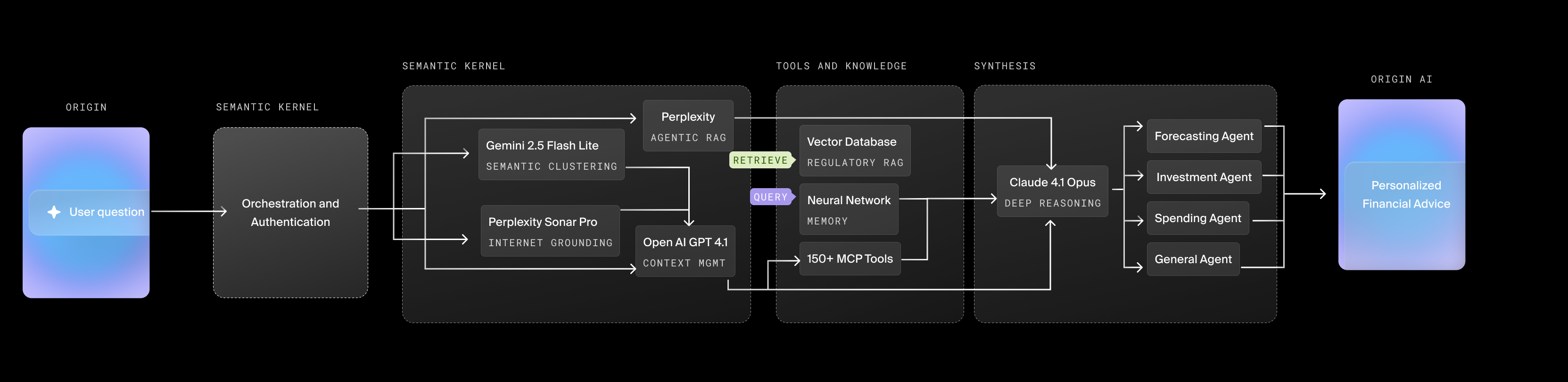

Origin has built the first AI financial advisor designed for contextual reasoning, combining a multi-agent architecture with leading LLMs (Claude 4.1 Opus, OpenAI GPT, Gemini 2.5 Flash, and Perplexity Sonar Pro) to deliver advisor-grade answers. Each query is routed to specialized agents and enriched with real-time market data and the user’s financial history, so responses are personalized, current, and context-aware. A compliance system vets every output against 100+ fiduciary, privacy, and accuracy standards. The result is the first AI platform capable of operating safely at scale in regulated financial domains — producing advisor-grade responses that outperform both humans and frontier LLMs on financial reasoning tasks.

LLMs can generate fluent answers, but they can also fail at financial reasoning. They can explain what an ETF is, but not whether your portfolio is overexposed to tech. More critically, they struggle with mathematical precision: a frontier model might confidently miscalculate a portfolio allocation or compound interest, turning a reasonable strategy into costly advice. Because they don’t have access to your financial data, they either remain generic or try to collect everything from you directly. Yet when overloaded with context, they suffer from context rot: their reasoning can break down and become incoherent as more information is added. Human advisors excel at personalization, but they are costly and can be scarce and inconsistent.

At Origin, our breakthrough was architecting a system that pairs the contextual reasoning of frontier LLMs with deterministic computational engines. The LLM layer interprets complex financial scenarios and orchestrates tasks, while deterministic modules handle the underlying math with absolute precision. This hybrid design eliminates the computational fragility of pure LLM systems, ensuring both strategic nuance and numerical accuracy. The result is an advisor that scales like AI, reasons like a human, and operates with the rigor required for regulatory compliance.
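One way to picture this split is that the LLM never performs arithmetic itself: it proposes a structured calculation request, and a deterministic engine validates and executes it. The sketch below assumes the request arrives as JSON; the `monthly_payment` operation, the `ENGINE` allow-list, and the request shape are hypothetical illustrations, not Origin's interface.

```python
import json
from decimal import Decimal, ROUND_HALF_UP

CENT = Decimal("0.01")


def monthly_payment(principal: Decimal, annual_rate: Decimal, months: int) -> Decimal:
    """Fixed-rate amortizing loan payment, rounded to the cent."""
    r = annual_rate / 12
    if r == 0:
        payment = principal / months
    else:
        payment = principal * r / (1 - (1 + r) ** -months)
    return payment.quantize(CENT, rounding=ROUND_HALF_UP)


# Allow-list of deterministic operations the LLM may request (hypothetical).
ENGINE = {
    "monthly_payment": lambda a: monthly_payment(
        Decimal(a["principal"]), Decimal(a["annual_rate"]), int(a["months"])
    ),
}


def execute_request(raw_llm_output: str) -> Decimal:
    """Parse an LLM-proposed calculation and run it deterministically."""
    request = json.loads(raw_llm_output)
    operation = request.get("operation")
    if operation not in ENGINE:
        raise ValueError(f"unsupported operation: {operation!r}")
    return ENGINE[operation](request["args"])


# The model proposes the calculation; the engine produces the number.
proposal = ('{"operation": "monthly_payment", '
            '"args": {"principal": "200000", "annual_rate": "0.06", "months": 360}}')
print(execute_request(proposal))
```

Rejecting anything outside the allow-list means a malformed or invented request fails loudly instead of yielding a plausible-looking number.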

Technical System Overview

Origin’s AI Advisor is implemented as a multi-agent ensemble, coordinated by a central orchestration layer.

At the heart of the system is a Core Router, which classifies every user query and delegates it to domain-specialized agents. Each agent is tuned for a specific dimension of financial planning:

- Spending Agent: budgeting, cash flow optimization, expense analysis

- Invest Agent: portfolio construction, rebalancing, investment analysis

- Planning Agent: long-term scenarios for retirement, estate, and tax planning

This mirrors how full-scale financial advisory teams work in practice, with specialists collaborating on complex client needs, except that here the orchestration is automated, consistent, and instant. In effect, we’re bringing the caliber of services historically reserved for the top 1% to everyone, at scale.
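The Core Router's job can be sketched as a function from a query to one or more agents. In this minimal sketch, a keyword table stands in for the LLM-based intent classifier described above; the keyword stems and the fallback to the Planning Agent are assumptions made for illustration.

```python
from enum import Enum
from typing import List


class Agent(Enum):
    SPENDING = "spending"
    INVEST = "invest"
    PLANNING = "planning"


# Hypothetical keyword stems; a production router would classify with an LLM.
KEYWORDS = {
    Agent.SPENDING: ("budget", "spend", "expense", "cash flow"),
    Agent.INVEST: ("portfolio", "allocation", "rebalanc", "etf", "stock"),
    Agent.PLANNING: ("retire", "estate", "tax", "inherit"),
}


def route(query: str, fallback: Agent = Agent.PLANNING) -> List[Agent]:
    """Return every agent whose keywords appear in the query, in a stable order."""
    text = query.lower()
    matches = [agent for agent, stems in KEYWORDS.items()
               if any(stem in text for stem in stems)]
    return matches or [fallback]
```

A cross-domain question such as "How will rebalancing my portfolio affect my taxes?" returns both the Invest and Planning agents, which is exactly where specialist collaboration matters. Substring matching is deliberately crude ("tax" also matches "taxi"), which is one reason a learned classifier is preferable in practice.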

Core components of the ensemble: The advisor is a heterogeneous ensemble of LLMs coordinated by an orchestration layer, with each component playing a specialized role:

- Claude 4.1 Opus (Anthropic): Primary reasoning agent. Handles multi-pillar analysis across domains, using its long context window for multi-hop reasoning, such as connecting an RSU vesting schedule to its downstream tax implications.

- OpenAI GPT (via Model Context Protocol): The system’s technical backbone. Origin integrates with 150+ proprietary MCP tools, well beyond typical agent frameworks, which often cap at 10–128 tools for accuracy. This level of integration places Origin closer to advanced research benchmarks like LiveMCPBench (500+ tools) and MCPToolBench++ (4,000+ tools), a degree of tool richness and orchestration that few systems attempt, let alone sustain in production. Because complex operations such as retrieving tax tables or running Monte Carlo simulations are abstracted behind tools, GPT can dynamically route requests to the right tool without bloating prompts.

- Gemini 2.5 (Google): Real-time grounding. Sub-second retrieval of market data, news, and analyst commentary. Essential for time-sensitive reasoning, like explaining how S&P movements impact your portfolio today versus last week.

- Perplexity Sonar Pro: Specialized retrieval backbone and Origin’s agentic RAG (Retrieval-Augmented Generation) system. It handles query decomposition and contextual linking across Origin-specific knowledge, ensuring the advisor can not only analyze your finances but also surface the right context to explain how to use Origin’s features effectively.
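The glossary-then-expand pattern for a large tool catalog can be sketched as a registry that exposes one-line summaries up front and full parameter schemas only for the tools a first pass selects. The tool names and schemas below are hypothetical, and the expanded entries loosely follow a JSON Schema style rather than any specific MCP message format.

```python
import json
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Tool:
    name: str
    summary: str   # one-line glossary entry, always visible to the model
    schema: dict   # full parameter schema, expanded only on demand


class ToolRegistry:
    def __init__(self, tools: List[Tool]):
        self._tools: Dict[str, Tool] = {tool.name: tool for tool in tools}

    def glossary(self) -> str:
        """Compact listing the model sees before choosing tools."""
        return "\n".join(f"{t.name}: {t.summary}" for t in self._tools.values())

    def expand(self, names: List[str]) -> List[dict]:
        """Full definitions for the selected tools only; unknown names are skipped."""
        return [
            {"name": t.name, "description": t.summary, "parameters": t.schema}
            for t in (self._tools.get(n) for n in names)
            if t is not None
        ]


registry = ToolRegistry([
    Tool("get_tax_table", "Fetch income tax brackets for a year",
         {"type": "object", "properties": {"year": {"type": "integer"},
                                           "filing_status": {"type": "string"}}}),
    Tool("run_monte_carlo", "Simulate portfolio outcomes",
         {"type": "object", "properties": {"years": {"type": "integer"},
                                           "trials": {"type": "integer"}}}),
    Tool("get_holdings", "List the user's current positions",
         {"type": "object", "properties": {"account_id": {"type": "string"}}}),
])

selected = registry.expand(["get_tax_table"])  # chosen after reading the glossary
print(json.dumps(selected, indent=2))
```

With a few hundred tools, only the short glossary lines scale with catalog size; the larger schemas enter the prompt just for the handful of tools a query actually needs.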

Pipeline: Every interaction follows a structured pipeline:

- Contextual Assembly: Securely retrieve relevant account balances, transactions, portfolio holdings, and live market data.

- Agent Routing: Orchestrator classifies query intent and selects relevant agents.

- Collaborative Reasoning: Models run in parallel or in sequence, with Claude for reasoning, GPT for tool execution, and Gemini for real-time grounding.

- Compliance Gateway: Proprietary EVAL system runs 138 automated checks to validate outputs for numerical accuracy, suitability, disclosure compliance, and privacy.

- Audit Logging: Full end-to-end logging: query, data touched, model tokens, EVAL outcome. Encrypted, immutable, and retained for regulatory disclosure.

This design ensures that reasoning is both personalized and auditable — critical in financial domains where black-box answers are insufficient.
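The pipeline stages and the immutable-audit requirement can be sketched together. This minimal sketch assumes each stage is a plain callable and makes the log tamper-evident by chaining SHA-256 hashes. Hash chaining is a swapped-in illustration of immutability, not Origin's documented mechanism, and encryption at rest would be a separate layer; all names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64


def _digest(prev_hash: str, record: dict) -> str:
    payload = json.dumps(record, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else GENESIS
        digest = _digest(prev, record)
        self.entries.append({"record": record, "prev": prev, "hash": digest})
        return digest

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or _digest(prev, entry["record"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


def handle_query(query, log, assemble, route, reason, check):
    """Contextual assembly, routing, reasoning, compliance gate, then audit log."""
    context = assemble(query)
    agents = route(query)
    draft = reason(query, context, agents)
    passed = check(draft)
    log.append({"query": query, "agents": agents,
                "data_touched": sorted(context), "eval_passed": passed})
    return draft if passed else None
```

In this sketch, a draft that fails the compliance check is withheld from the user but still logged, so blocked outputs remain reviewable.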

Primary Interfaces Powered by Origin’s AI

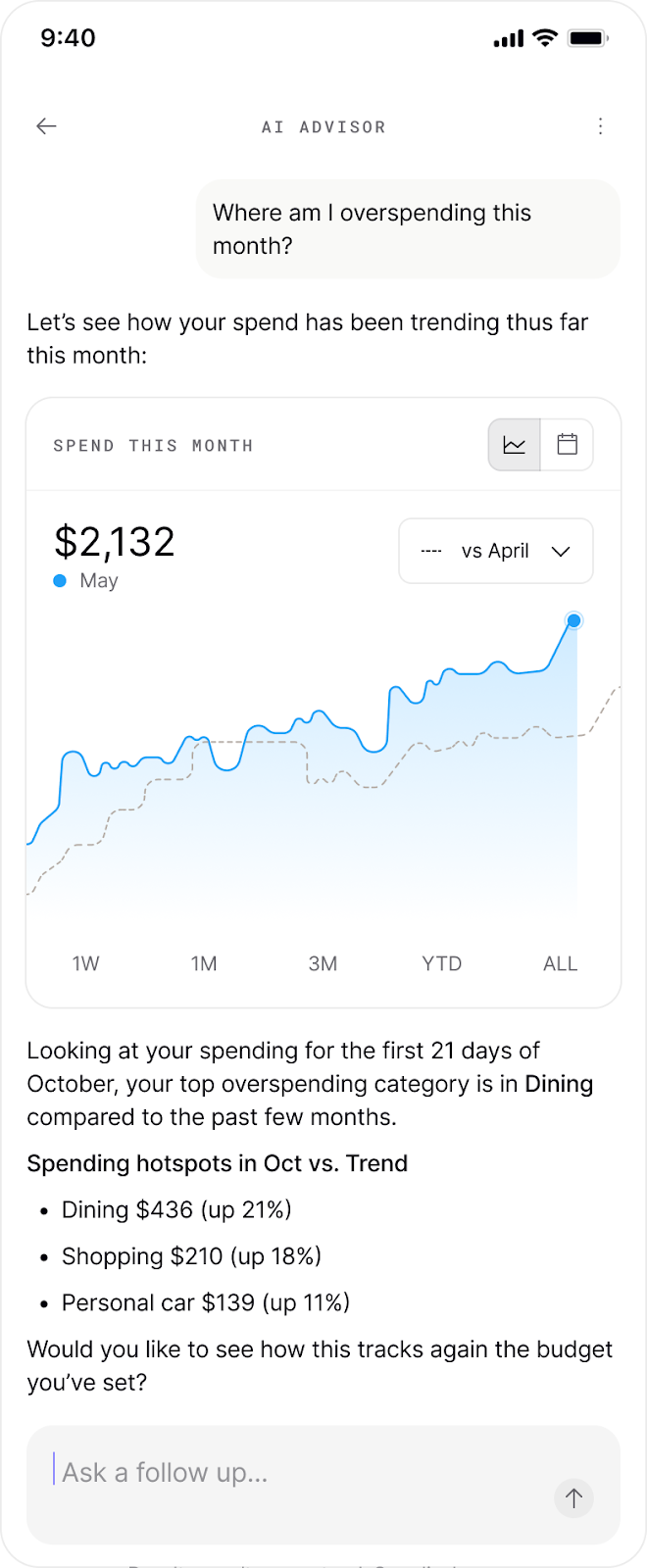

- Conversational Agent (AI Advisor): A stateful, context-aware chat interface. Memory isn’t just token history; it’s a structured context persisted across sessions. If you reclassify a category (e.g., “Uber = transportation”), that semantic adjustment is remembered and applied in future analyses. This design avoids the brute-force “stuff everything in the prompt” approach that causes context rot.

- Instant Insights: Tap any card for localized explanations of every metric. For example, “Why is my net worth down this month?” triggers account retrieval, asset class breakdown, correlation with market events, and a structured explanation.

- Daily & Weekly Reports: Retrieval-augmented generation (RAG) pipelines. Daily Brief surfaces market events relevant to your holdings; Weekly Recap synthesizes spending, saving, and portfolio shifts. Perplexity Sonar contextualizes, Gemini provides live grounding, and compliance filters enforce factual accuracy.
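The difference between structured memory and raw token history can be sketched as a small store of user corrections that is serialized between sessions and applied to new data. This is a minimal sketch, assuming overrides are keyed by normalized merchant name; `CategoryMemory` and its JSON format are hypothetical.

```python
import json
from typing import Dict, List, Optional


class CategoryMemory:
    """User category corrections persisted as structured data, not chat history."""

    def __init__(self, overrides: Optional[Dict[str, str]] = None):
        self.overrides = dict(overrides or {})

    def remember(self, merchant: str, category: str) -> None:
        self.overrides[merchant.strip().lower()] = category

    def categorize(self, transactions: List[dict]) -> List[dict]:
        """Apply remembered corrections; leave other transactions untouched."""
        return [
            {**t, "category": self.overrides.get(t["merchant"].strip().lower(), t["category"])}
            for t in transactions
        ]

    def dumps(self) -> str:
        return json.dumps(self.overrides, sort_keys=True)

    @classmethod
    def loads(cls, data: str) -> "CategoryMemory":
        return cls(json.loads(data))


# Session 1: the user says "Uber = transportation".
memory = CategoryMemory()
memory.remember("Uber", "transportation")
saved = memory.dumps()

# Session 2: a fresh process reloads only the structured state.
restored = CategoryMemory.loads(saved)
print(restored.categorize([{"merchant": "UBER", "category": "uncategorized"}]))
```

Only the compact override map travels between sessions, so the correction is applied without replaying earlier conversations into the prompt.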

Evaluation Methodology and Results

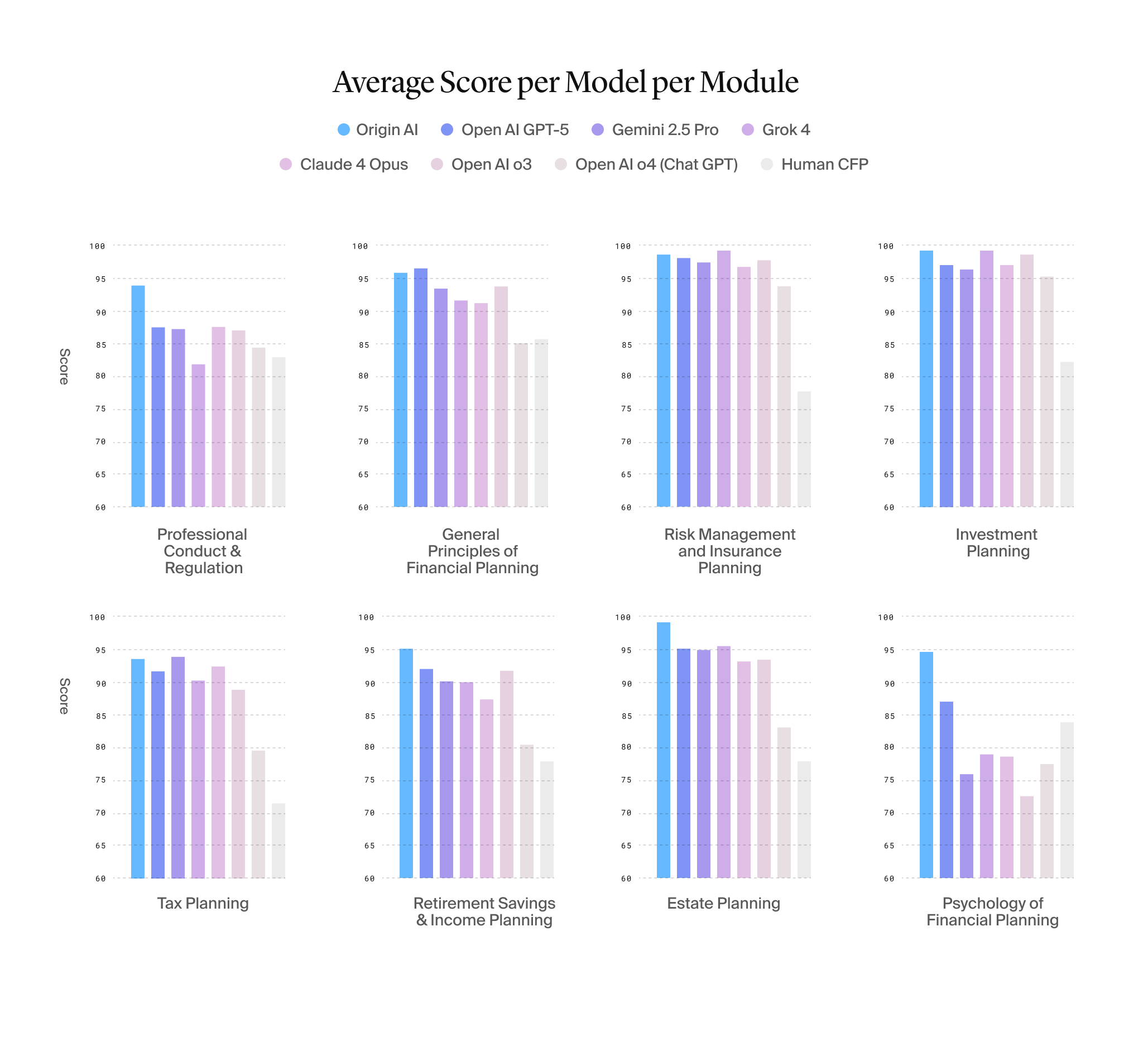

We benchmarked the advisor against a Certified Financial Planner® (CFP®) sample practice exam — the industry standard for human financial advisors. To ensure fairness and rigor, we held testing conditions constant across models: identical exam sets, randomized question orders, controlled prompts, and no access to external tools or retrieval. This ensured that results reflected reasoning ability rather than prompt engineering or memorization tricks.
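Holding the exam set constant while randomizing question order can be sketched as a harness that shuffles the same questions with a different fixed seed per run, scores each run, and reports the spread. The question format and the `answer_fn` interface are assumptions for illustration, not Origin's evaluation code.

```python
import random
import statistics
from typing import Callable, Sequence, Tuple

Question = Tuple[str, str]  # (prompt, correct answer key), hypothetical format


def run_exam(questions: Sequence[Question], answer_fn: Callable[[str], str],
             seed: int) -> float:
    """Score one run, with question order randomized by a reproducible seed."""
    order = list(questions)
    random.Random(seed).shuffle(order)
    correct = sum(1 for prompt, key in order if answer_fn(prompt) == key)
    return 100.0 * correct / len(order)


def evaluate(questions: Sequence[Question], answer_fn: Callable[[str], str],
             seeds: Sequence[int]) -> dict:
    """Repeat the identical exam under several orderings and summarize the scores."""
    scores = [run_exam(questions, answer_fn, seed) for seed in seeds]
    return {
        "mean": statistics.mean(scores),
        "min": min(scores),
        "max": max(scores),
        "stdev": statistics.pstdev(scores),
    }
```

For a stateless, deterministic answerer, order should not change the score, so a spread across seeds flags order sensitivity or nondeterminism: the kind of drift the results compare across models.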

Why Origin outpaces base LLMs

Unlike raw LLMs that improve only when their next version ships, Origin’s architecture compounds gains. Every upgrade to an underlying model makes our ensemble stronger, but the orchestration, domain-specific tuning, compliance guardrails, and multi-agent retrieval layer are what turn that raw capability into reliable financial advice. In other words: as LLMs get smarter, so do we — and Origin will always outpace the base model because our system learns, layers, and governs in ways a standalone LLM cannot.

Setup: 432 hours, 6,000 unique CFP® sample questions administered by Origin

Results:

- Origin: 98.3% average score

- Human CFP® average score: 79.5%

- GPT-5: 93.8% average score

- Gemini 2.5 Pro: 93.1% average score

- Grok 4: 92.5% average score

- Claude 4 Opus: 91.4% average score

- OpenAI GPT-4o: 85.4% average score

- Variance: Held between 95–97% across multiple runs. Other models showed drift and instability.

- Domain scores: Origin's AI outperforms leading LLMs and human CFP® professionals across all 8 CFP® sample exam modules, reflecting the consistency of a well-rounded financial advisor. Sample highlights and aggregate results below:

- Investment Planning: >98%

- Estate Planning: >99%

- Tax Planning: +22 points vs. humans

- Example test: When asked about RSUs at a non-public company, the model flagged the inconsistency and responded: “Are you sure? If the company isn’t public, you may have stock options instead.” This demonstrates context-aware reasoning — a weak spot for generic models.

Capabilities That Enable This

- Memory: Structured persistence across sessions. Financial state (transactions, classifications, portfolio changes) is retrieved selectively, not dumped wholesale, to minimize hallucinations.

- Reasoning: Multi-agent workflows combine Opus’s long-context reasoning, GPT’s tool orchestration, and structured simulators (Monte Carlo, tax engines).

- Real-time grounding: Gemini integrates live market data at inference. This allows for “up-to-the-minute” answers (e.g., why your portfolio moved today).
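A tax engine in this sense is deterministic code rather than model output. As a minimal sketch, a progressive bracket calculation looks like the following; the bracket thresholds and rates are invented for illustration and do not correspond to any real tax table.

```python
from decimal import Decimal
from typing import Optional, Sequence, Tuple

# Hypothetical brackets: (upper bound of the bracket, or None for the top bracket, rate).
BRACKETS: Sequence[Tuple[Optional[Decimal], Decimal]] = (
    (Decimal("10000"), Decimal("0.10")),
    (Decimal("40000"), Decimal("0.20")),
    (None, Decimal("0.30")),
)


def progressive_tax(income: Decimal, brackets=BRACKETS) -> Decimal:
    """Tax each slice of income at its own bracket's rate, rounded to the cent."""
    tax = Decimal("0")
    lower = Decimal("0")
    for upper, rate in brackets:
        if income <= lower:
            break
        top = income if upper is None else min(income, upper)
        tax += (top - lower) * rate
        if upper is None:
            break
        lower = upper
    return tax.quantize(Decimal("0.01"))


income = Decimal("50000")
tax = progressive_tax(income)
print(tax, f"effective rate {tax / income:.1%}")
```

Only the income above each threshold is taxed at the higher rate, which is why the effective rate stays below the top marginal rate.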

Design Considerations

- Context engineering vs. context rot: Only relevant slices of data are retrieved per query.

- Statefulness: Structured memory maintains continuity without ballooning context windows.

- Reliability: Consistent accuracy across evaluation runs, unlike single-model baselines.

- Compliance-first: Automated EVAL layer helps ensure outputs meet disclosure and privacy/compliance standards before surfacing.

- Security: TLS 1.3 in transit, AES-256-GCM at rest with AWS KMS envelope keys. SOC 2 Type II certified. User history is retained for 12 months and audit logs for 7 years. Enterprise agreements with our LLM providers enforce Zero Data Retention policies for maximum privacy.
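Retrieving only the relevant slices of data per query can be sketched as a selector that maps the routed agents to the data categories they need and trims transaction history to the query's time window. The agent-to-slice mapping, field names, and item cap below are hypothetical.

```python
from datetime import date

# Hypothetical mapping from agent to the data slices it needs.
SLICES_BY_AGENT = {
    "spending": {"transactions"},
    "invest": {"holdings", "market"},
    "planning": {"holdings", "income", "goals"},
}


def select_context(user_data: dict, agents: list, since: date,
                   max_items: int = 50) -> dict:
    """Return only the slices the routed agents need, not the whole profile."""
    wanted = set().union(*(SLICES_BY_AGENT[agent] for agent in agents))
    context = {}
    for key in sorted(wanted):
        if key not in user_data:
            continue
        value = user_data[key]
        if key == "transactions":
            # Keep the query's time window; assumes oldest-to-newest order,
            # so the slice keeps the most recent `max_items` transactions.
            value = [t for t in value if t["date"] >= since][-max_items:]
        context[key] = value
    return context
```

A spending question therefore pulls one month of transactions rather than years of history plus holdings and goals, which keeps the prompt small as the user's data grows.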

Intended Use Cases and Workflows

The system is designed to reason across the same domains a human advisor would handle, but with richer state, broader market awareness, and real-time adaptability. Three representative use cases highlight how the architecture operates.

Spending analysis begins with a simple query like “Where am I overspending this month?” Instead of just surfacing a transaction list, the system parses intent, resolves the relevant timeframe, and pulls categorized data from linked accounts. Persistent memory ensures prior user corrections—like reclassifying Pilates as fitness—are applied automatically, keeping the data set clean. From there, it runs anomaly detection against the user’s historical baseline and peer spending patterns, isolating categories that deviate significantly. The explanation surfaces as plain language: dining spend is 42% higher than usual, or rideshare costs have increased due to higher trip frequency. In practice, this workflow turns a vague concern about “overspending” into a targeted, data-driven insight.
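The baseline comparison step can be sketched as a percent-deviation check per category. This minimal sketch compares only against the user's own trailing average (the peer-pattern comparison mentioned above is omitted), and the 25% threshold is an assumed value.

```python
from statistics import mean


def flag_overspending(history: dict, current: dict, threshold: float = 0.25) -> list:
    """Flag categories whose current spend exceeds their trailing average by > threshold.

    history: category -> list of prior monthly totals
    current: category -> this month's total
    Returns (category, percent above baseline) pairs, largest first.
    """
    flags = []
    for category, amount in current.items():
        past = history.get(category)
        if not past:
            continue  # no baseline yet for this category
        baseline = mean(past)
        if baseline <= 0:
            continue
        change = (amount - baseline) / baseline
        if change > threshold:
            flags.append((category, round(change * 100)))
    return sorted(flags, key=lambda flag: flag[1], reverse=True)


history = {"dining": [400, 400, 400], "rideshare": [100, 100, 100],
           "groceries": [500, 500, 500]}
current = {"dining": 568, "rideshare": 150, "groceries": 510}
print(flag_overspending(history, current))
```

The percentages it returns are what the plain-language explanation would then cite, for example dining running 42% above its usual level.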

Investment optimization extends the architecture to portfolio management. A query like “Is my allocation optimized?” triggers a chain that retrieves the user’s current holdings and risk profile, scans live market signals from indices and analyst reports, and stress-tests the portfolio using Monte Carlo simulations. These results are reconciled against the user’s goals, such as retirement age or liquidity constraints, to determine whether the current allocation is aligned. The system then produces a recommendation in concrete terms: equities are overweighted relative to risk tolerance, and reallocating 12% to fixed income would reduce volatility with minimal impact on expected return. Unlike generic LLMs that respond with broad principles like “diversify,” this workflow produces guidance that is situationally accurate and grounded in both user and market context.
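Why moving weight from equities to fixed income lowers volatility follows from the standard two-asset portfolio variance formula, sigma_p^2 = w_e^2 sigma_e^2 + w_b^2 sigma_b^2 + 2 w_e w_b rho sigma_e sigma_b. The sketch below evaluates it for a 12-point shift; the volatilities, correlation, and expected returns are hypothetical inputs, not market estimates or Origin's model.

```python
from math import sqrt


def two_asset_stats(w_equity: float, mu_e: float, mu_b: float,
                    sigma_e: float, sigma_b: float, rho: float) -> tuple:
    """Expected return and volatility of an equity/bond mix (weights sum to 1)."""
    w_bond = 1.0 - w_equity
    expected = w_equity * mu_e + w_bond * mu_b
    variance = (w_equity ** 2 * sigma_e ** 2
                + w_bond ** 2 * sigma_b ** 2
                + 2 * w_equity * w_bond * rho * sigma_e * sigma_b)
    return expected, sqrt(variance)


# Hypothetical annual assumptions: equities 7% / 16% vol, bonds 3% / 5% vol, correlation 0.1.
ASSUMPTIONS = dict(mu_e=0.07, mu_b=0.03, sigma_e=0.16, sigma_b=0.05, rho=0.1)

for w in (0.72, 0.60):  # before and after moving 12 points to fixed income
    ret, vol = two_asset_stats(w, **ASSUMPTIONS)
    print(f"equity {w:.0%}: expected {ret:.2%}, volatility {vol:.2%}")
```

Whether the return impact counts as minimal depends entirely on the assumed inputs; the point of the sketch is that both effects are computed rather than asserted.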

Forecasting illustrates the long-horizon reasoning of the Forecaster Agent. A question like “Can I retire at 60?” leads to a layered analysis: income, expenses, and portfolio growth are modeled via Monte Carlo, while tax regimes, interest rate assumptions, and inflation scenarios are factored in. Lifestyle assumptions such as housing moves, education costs, or health care expenses are integrated directly from user data or added interactively. The agent maintains state so projections can be adjusted incrementally—adding a vacation home purchase, for example—without rebuilding the model from scratch. The output is probabilistic and explanatory: retiring at 60 has a 74% success rate under current assumptions, delaying two years raises this to 92%, and healthcare costs drive nearly one-third of forecast volatility. This workflow demonstrates how the system can translate open-ended life questions into rigorously modeled, regulator-ready financial projections.
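The Monte Carlo step behind a success-rate figure can be sketched as many simulated market paths, counting the share in which savings never run out. This minimal sketch assumes independent, normally distributed real (inflation-adjusted) annual returns, constant real spending, and no taxes; all parameter values are hypothetical, and its outputs are not the figures quoted above.

```python
import random


def retirement_success_rate(savings: float, annual_contribution: float,
                            annual_spending: float, years_to_retirement: int,
                            years_in_retirement: int, mean_return: float = 0.05,
                            stdev_return: float = 0.12, trials: int = 10_000,
                            seed: int = 42) -> float:
    """Share of simulated paths in which the portfolio never goes negative.

    Returns are drawn as independent normal real annual returns, so spending
    is held constant in today's dollars.
    """
    rng = random.Random(seed)  # seeded so results are reproducible
    successes = 0
    for _ in range(trials):
        balance = savings
        # Accumulation phase: grow, then add the year's contribution.
        for _ in range(years_to_retirement):
            balance = balance * (1 + rng.gauss(mean_return, stdev_return)) + annual_contribution
        # Drawdown phase: grow, then withdraw; stop the path once it is depleted.
        for _ in range(years_in_retirement):
            balance = balance * (1 + rng.gauss(mean_return, stdev_return)) - annual_spending
            if balance < 0:
                break
        if balance >= 0:
            successes += 1
    return successes / trials


# Hypothetical household, age 55: $800k saved, $30k/yr contributions, $60k/yr spending to age 90.
at_60 = retirement_success_rate(800_000, 30_000, 60_000, years_to_retirement=5, years_in_retirement=30)
at_62 = retirement_success_rate(800_000, 30_000, 60_000, years_to_retirement=7, years_in_retirement=28)
print(f"retire at 60: {at_60:.0%}, retire at 62: {at_62:.0%}")
```

Fixing the seed makes each run reproducible. An incremental what-if, such as a one-time home purchase, could be modeled as an extra withdrawal in a given year rather than by rebuilding the model.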

Together, these use cases show how the architecture decomposes broad financial questions into structured reasoning processes and then reassembles them into contextualized answers. The outcome is not a dashboard of numbers, but explanations that reflect the user’s real situation, current market conditions, and regulatory guardrails.

Privacy and Compliance

- SOC 2 certified

- GDPR/CCPA compliant

- Read-only by design: cannot move funds or alter accounts

- Immutable audit trails for explainability and regulatory audit

Conclusion

Origin’s AI Advisor represents a breakthrough in applying AI to complex, regulated financial domains. Its heterogeneous, multi-agent architecture delivers persistent memory, orchestrated reasoning, and real-time grounding — enabling advisor-grade financial analysis at scale.

This work demonstrates a broader lesson: in complex, regulated domains, agentic orchestration beats monolithic LLMs. The right model isn’t a bigger model, but a system that retrieves the right context, routes tasks to specialized agents, and enforces compliance before output.

CFP® is a registered certification mark owned by Certified Financial Planner Board of Standards, Inc. Origin is not affiliated with, endorsed by, or certified by CFP Board. Past performance does not guarantee future results. Performance metrics, including those based on standardized test questions, may not represent actual client results or guarantee future performance. Advisory services are offered through Origin Investment Advisory LLC ("Origin RIA"), a Registered Investment Adviser registered with the Securities and Exchange Commission. Origin AI Advisor is an AI-powered financial assistant that provides personalized financial advice. To receive personalized advice, users must first complete our suitability questionnaire; otherwise, AI Advisor is prohibited from delivering personalized advice to that user. Recommendations are based on algorithms and may contain errors or 'model hallucinations', though these are significantly reduced through our multi-layered validation architecture: (1) 138-point automated compliance checks via our proprietary EVAL system that validate numerical accuracy and factual consistency, (2) selective context retrieval that minimizes context rot by loading only relevant data slices rather than dumping wholesale information, (3) heterogeneous LLM ensemble verification where multiple models cross-validate outputs via LLM-as-judge, (4) real-time grounding that anchors responses to current market data, and (5) structured memory persistence that maintains semantic consistency across sessions. All advice is non-discretionary, meaning users are responsible for deciding whether to act on it. The accuracy of the guidance depends on the accuracy of the data provided. Users are encouraged to consult with a human financial planner for significant decisions. For additional disclosures, visit useorigin.com/legal
